Soul App's Open-Source Model Brings Human-like Naturalness to AI Podcasts

SHANGHAI, Oct. 30, 2025 /PRNewswire/ -- Soul AI Lab, the AI technology team behind the social platform Soul App, has officially open-sourced its voice podcast generation model, SoulX-Podcast. Designed specifically for multi-speaker, multi-turn dialogue scenarios, the model supports multiple languages and dialects, including Mandarin, English, Sichuanese, and Cantonese, and can render paralinguistic styles. It can reliably generate natural, fluent multi-turn voice dialogues longer than 60 minutes, with accurate speaker switching and rich prosodic variation.

Beyond podcast-specific applications, SoulX-Podcast also performs strongly on general speech synthesis and voice cloning tasks, delivering a more authentic and expressive voice experience.

- Demo Page: https://soul-ailab.github.io/soulx-podcast

- Technical Report: https://arxiv.org/pdf/2510.23541

- Source Code: https://github.com/Soul-AILab/SoulX-Podcast

- Hugging Face: https://huggingface.co/collections/Soul-AILab/soulx-podcast

Key Capabilities: Fluid Multi-Turn Dialogue, Multi-Dialect Support, Ultra-Long Podcast Generation

1. Zero-Shot Cloning for Multi-Turn Dialogue: In zero-shot podcast generation scenarios, SoulX-Podcast demonstrates exceptional speech synthesis capabilities. It not only accurately reproduces the timbre and style of the reference audio but also dynamically adapts prosody and rhythm to the dialogue context, so that conversations sound natural and rhythmically engaging. Whether in extended multi-turn dialogues or emotionally nuanced exchanges, SoulX-Podcast consistently maintains vocal coherence and authentic expression. Additionally, the model supports controllable generation of various paralinguistic elements, such as laughter and throat-clearing, enhancing the immediacy and expressiveness of synthesized speech.

2. Multilingual and Cross-Dialect Voice Cloning: In addition to Mandarin and English, SoulX-Podcast supports several major Chinese dialects, including Sichuanese, Henanese, and Cantonese. More notably, the model achieves cross-dialect voice cloning: even when given only a Mandarin reference recording, it can generate natural speech with the phonetic characteristics of these target dialects.

3. Ultra-Long Podcast Generation: SoulX-Podcast can generate ultra-long podcasts while maintaining a stable timbre and style throughout.

Collaborative Exploration: Expanding Possibilities for AI and Social Interaction

Although recent open-source research has begun to explore multi-speaker, multi-turn speech synthesis for podcast and dialogue scenarios, existing work remains largely confined to Mandarin and English, offering limited support for widely used Chinese dialects such as Cantonese, Sichuanese, and Henanese. Furthermore, in multi-turn voice dialogues, appropriate paralinguistic expressions, such as sighs, breaths, and laughter, are essential for enhancing vividness and naturalness, yet these nuances remain underexplored in current models.

SoulX-Podcast is designed to address these very gaps. By integrating support for extended multi-speaker dialogues, comprehensive dialect coverage, and controllable paralinguistic generation, the model brings synthesized podcast speech closer to real-world communication, making it more expressive, engaging, and immersive for listeners.

The overall architecture of SoulX-Podcast adopts the widely used "LLM + Flow Matching" paradigm for speech generation: the LLM models semantic tokens, and the flow matching module then models the acoustic features. For semantic token modeling, SoulX-Podcast is built on the Qwen3-1.7B foundation model and is initialized with its original parameters to fully leverage its robust language understanding capabilities.
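To make the "LLM + Flow Matching" split more concrete, the toy Python sketch below illustrates only the second stage: turning semantic tokens into acoustic feature frames by integrating a velocity field from Gaussian noise. It is not SoulX-Podcast's code. The token IDs, `toy_condition`, and `oracle_velocity` are hypothetical stand-ins (the oracle replaces a trained velocity network), and the straight-line probability path is the common conditional flow matching formulation, assumed here rather than taken from the release.

```python
import random
from typing import List, Tuple

Vector = List[float]


def toy_condition(semantic_tokens: List[int], dim: int = 4) -> List[Vector]:
    # HYPOTHETICAL: a fixed formula standing in for "the acoustic frame implied
    # by each semantic token". A real flow matching decoder never sees this
    # target at inference time; it learns a velocity network conditioned on tokens.
    return [[((tok * (d + 1)) % 7) / 7.0 for d in range(dim)] for tok in semantic_tokens]


def training_pair(x0: Vector, x1: Vector, t: float) -> Tuple[Vector, Vector]:
    # Straight-line path used in conditional flow matching:
    # x_t = (1 - t) * x0 + t * x1, with regression target u_t = x1 - x0.
    xt = [(1 - t) * a + t * b for a, b in zip(x0, x1)]
    ut = [b - a for a, b in zip(x0, x1)]
    return xt, ut


def oracle_velocity(x: Vector, t: float, target: Vector) -> Vector:
    # HYPOTHETICAL stand-in for a trained network v(x, t | tokens). On the
    # straight-line path, the exact velocity toward `target` is (target - x) / (1 - t).
    return [(y - xi) / (1.0 - t) for xi, y in zip(x, target)]


def sample_frames(semantic_tokens: List[int], dim: int = 4,
                  steps: int = 10, seed: int = 0) -> List[Vector]:
    rng = random.Random(seed)
    frames = []
    for target in toy_condition(semantic_tokens, dim):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from Gaussian noise at t = 0
        dt = 1.0 / steps
        for i in range(steps):  # Euler integration of dx/dt = v(x, t) up to t = 1
            v = oracle_velocity(x, i * dt, target)
            x = [xi + dt * vi for xi, vi in zip(x, v)]
        frames.append(x)
    return frames


if __name__ == "__main__":
    tokens = [12, 5, 33]  # HYPOTHETICAL semantic token IDs, as if emitted by the LLM stage
    for frame, target in zip(sample_frames(tokens), toy_condition(tokens)):
        print([round(v, 3) for v in frame], "target:", [round(v, 3) for v in target])
```

The design point the sketch shows is the division of labor: the language model only has to decide *what* is said (discrete semantic tokens), while the flow matching stage handles *how* it sounds by transporting noise to continuous acoustic features, which in a real system would be conditioned on the tokens, speaker reference, and context.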

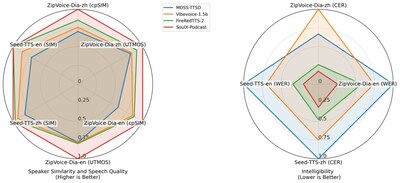

Although SoulX-Podcast is specifically designed for multi-speaker, multi-turn dialogues, it also performs exceptionally well in conventional single-speaker speech synthesis and zero-shot voice cloning tasks. On podcast generation benchmarks, the model achieves top-tier results in both speech intelligibility and speaker similarity compared with recent related work.

The open-source release of SoulX-Podcast marks a significant milestone in Soul's engagement with the open-source community. The Soul AI technology team has announced plans to continue enhancing core interactive capabilities, including conversational speech synthesis, full-duplex voice calls, human-like expressiveness, and visual interaction, and to accelerate the integration of these technologies across diverse application scenarios. The ultimate goal is to deliver more immersive, intelligent, and emotionally resonant experiences that foster user well-being and a stronger sense of belonging.

View original content to download multimedia: https://www.prnewswire.com/apac/news-releases/soul-apps-open-source-model-brings-human-like-naturalness-to-ai-podcasts-302599469.html

SOURCE Soul App