TARS Unveils World's First Scalable Real-World Multimodal Dataset for Embodied Intelligence, Opening Access in December 2025

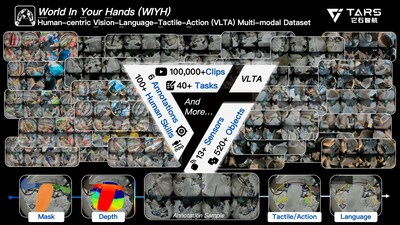

SHANGHAI, Oct. 16, 2025 /PRNewswire/ -- Lanchi Ventures-backed TARS Robotics, an AI-driven embodied intelligence company dedicated to delivering advanced robotic hardware, data, and model solutions, has launched World In Your Hands (WIYH), the world's first large-scale, real-world Vision-Language-Tactile-Action (VLTA) multimodal dataset designed for embodied intelligence. The release comes at a critical juncture for the industry, where the scarcity of high-quality training data has become a major bottleneck. Traditional data sources—such as inconsistent internet-sourced content and simulation data with limited real-world applicability—have long constrained progress in developing robust embodied AI systems.

Scheduled for open access in December 2025 to research institutions and industry partners, WIYH establishes TARS's pioneering Human-Centric embodied data engine paradigm. The company, founded on February 5, 2025, estimates that this approach places it approximately six months ahead of projects like Tesla's Optimus.

The development of WIYH is guided by the company's mission to build trustworthy super-embodied intelligent systems and backed by strong investor support, including a $120 million Angel Round from investors such as Lanchi Ventures and a subsequent $122 million Angel+ Round.

Dr. Ding Wenchao, Chief Scientist of TARS, said, "The introduction of the WIYH dataset represents an industry milestone—enabling, for the first time, large-scale cross-industry and cross-task collection of visual, language, tactile, and action data from real-world environments. This foundational effort paves the way for scaling laws in future embodied foundation models."

Built on a first-person, Human-Centric data collection framework, WIYH moves beyond the constrained settings of labs or specialized data factories. Instead, it captures authentic human operational workflows across diverse sectors—including hotel laundry, supermarket assembly, and logistics operations—ensuring that the data not only overcomes traditional issues of scarcity, cost, and quality, but is also inherently grounded in real-world contexts.

The dataset is defined by four core attributes:

Authentic: Sourced from genuine embodied tasks that reflect real application scenarios.

Rich: Spans multiple industries and skill sets, supporting model transfer and generalization.

Comprehensive: Integrates fully annotated vision, language, tactile, and action data to facilitate multimodal alignment during pre-training.

Massive: Designed to match the scale of large language model (LLM) corpora, supporting long-term development in embodied intelligence.

These characteristics give rise to three key technical advantages:

Modal Integrity: Using proprietary hardware, WIYH synchronously captures visual (RGB), tactile (pressure signals), and action (finger joint pose and trajectory) data with high spatiotemporal alignment.

Advanced Annotation: In-house cloud-based foundation models generate high-precision labels—including 2D semantics, scene depth, task decomposition, object affordance, and motion trajectories—delivering rich supervisory signals for model pre-training.

Real-World Environment: By gathering data in open, non-dedicated operational settings, WIYH enhances authenticity, diversity, and generalization while significantly reducing acquisition costs compared to conventional approaches.

Rooted in the practical demands of "thousands of industries," WIYH aims to enable "one model for a thousand tasks," serving as essential infrastructure for training generalist embodied foundation models. It is set to accelerate the shift from single-task applications toward broadly capable robotic systems, laying the groundwork for the integration of embodied intelligence across global enterprises and households.

View original content to download multimedia: https://www.prnewswire.com/news-releases/tars-unveils-worlds-first-scalable-real-world-multimodal-dataset-for-embodied-intelligence-opening-access-in-december-2025-302586218.html

SOURCE Lanchi Ventures