Z.ai Releases GLM-4.7 Designed for Real-World Development Environments, Cementing Itself as "China's OpenAI"

BEIJING, Dec. 27, 2025 /PRNewswire/ -- On December 22, Z.ai released GLM-4.7, the latest iteration of its GLM large language model family. Designed to handle multi-step tasks in production, GLM-4.7 targets development environments that involve lengthy task cycles, frequent tool use, and higher demands for stability and consistency.

Built on GLM-4.6 with a Focus on Complex Development

GLM-4.7 is a step forward from GLM-4.6, with improved capabilities for developers. It features robust support for coding workflows, complex reasoning, and agentic-style execution, giving the model greater consistency even in long, multi-step tasks, as well as more stable behavior when interacting with external tools. For developers, this means GLM-4.7 is a reliable tool for everyday production.

The improvements extend beyond technical performance. GLM-4.7 also produces natural and engaging output for conversational, writing, and role-playing scenarios, evolving GLM towards a coherent open-source system.

Designed for Real Development Workflows

Expectations for model quality have become a central focus for developers. In addition to following prompts or plans, a model needs to call the right tools and remain consistent across long, multi-step tasks. As task cycles lengthen, even minor errors can have far-reaching impacts, driving up debugging costs and stretching delivery timelines. GLM-4.7 was trained and evaluated with these real-world constraints in mind.

In multi-language programming and terminal-based agent environments, the model shows greater stability across extended workflows. It already supports "think-then-act" execution patterns within widely used coding frameworks such as Claude Code, Cline, Roo Code, TRAE and Kilo Code, aligning more closely with how developers approach complex tasks in practice.

Z.ai evaluated GLM-4.7 on 100 real programming tasks in a Claude Code-based development environment, covering frontend, backend and instruction-following scenarios. Compared with GLM-4.6, the new model delivers clear gains in task completion rates and behavioral consistency. This reduces the need for repeated prompt adjustments and allows developers to focus more directly on delivery. Due to its excellent results, GLM-4.7 has been selected as the default model for the GLM Coding Plan.

Reliable Performance Across Tool Use and Coding Benchmarks

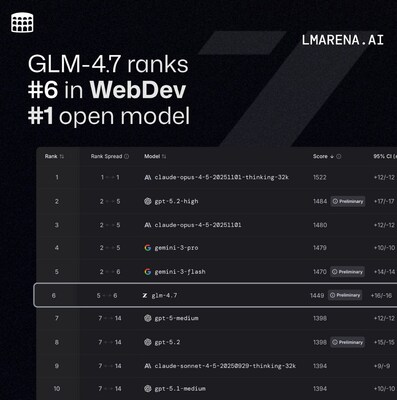

Across a range of code generation and tool use benchmarks, GLM-4.7 delivers competitive overall performance. On BrowseComp, a benchmark focused on web-based tasks, the model scores 67.5. On τ²-Bench, which evaluates interactive tool use, GLM-4.7 achieves a score of 87.4, the highest reported result among publicly available open-source models to date.

In major programming benchmarks including SWE-bench Verified, LiveCodeBench v6, and Terminal Bench 2.0, GLM-4.7 performs at or above the level of Claude Sonnet 4.5, while showing clear improvements over GLM-4.6 across multiple dimensions.

On Code Arena, a large-scale blind evaluation platform with more than one million participants, GLM-4.7 ranks first among open-source models and holds the top position among models developed in China.

More Predictable and Controllable Reasoning

GLM-4.7 introduces more fine-grained control over how the model reasons through long-running and complex tasks. As artificial intelligence systems integrate into production workflows, such capabilities have become an increasing focus for developers. GLM-4.7 is able to maintain consistency in its reasoning across multiple interactions, while also adjusting the depth of reasoning according to task complexity. This makes its behavior within agentic systems more predictable over time. Additionally, Z.ai is actively exploring new ways to deploy AI at scale as it develops and refines the GLM series.

Improvements in Front-end Generation and General Capabilities

Beyond functional correctness, GLM-4.7 shows a noticeably more mature understanding of visual structure and established front-end design conventions. In tasks such as generating web pages or presentation materials, the model tends to produce layouts with more consistent spacing, clearer hierarchy, and more coherent styling, reducing the need for manual revisions downstream.

At the same time, improvements in conversational quality and writing style have broadened the model's range of use cases. These changes make GLM-4.7 more suitable for creative and interactive applications.

Ecosystem Integration and Open Access

GLM-4.7 is available via the BigModel.cn API and is fully integrated into the Z.ai full-stack development environment. Developers and partners across the global ecosystem have already incorporated the GLM Coding Plan into their tools, including platforms such as TRAE, Cerebras, YouWare, Vercel, OpenRouter and CodeBuddy. Adoption across developer tools, infrastructure providers and application platforms suggests that GLM-4.7 is moving into wider engineering and product use.

Z.ai to Become the "World's First Large-Model Public Company"

Z.ai has announced that it aims to become the world's first publicly listed large-model company by listing on the Stock Exchange of Hong Kong. This planned IPO marks the first time capital markets will welcome a listed company whose core business is the independent development of AGI foundation models.

In 2022, 2023, and 2024, Z.ai earned 57.4 million RMB (~8.2 million USD), 124.5 million RMB (~17.7 million USD), and 312.4 million RMB (~44.5 million USD) in revenue, respectively. Between 2022 and 2024, the company's compound annual growth rate (CAGR) in revenue reached 130%. Revenue for the first half of 2025 was 190 million RMB (~27 million USD), marking three consecutive years of doubling revenue. During the reporting period, the company's large-model-related business was its key growth driver.
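For readers who want to check the growth figures, the short Python sketch below recomputes the CAGR and the year-over-year multiples from the annual revenue numbers stated above. The function name `cagr` and the variable names are illustrative and are not drawn from any Z.ai material. The calculation assumes the standard CAGR definition over two year-over-year intervals (2022 to 2024).

```python
# Annual revenue in millions of RMB, as stated in this release.
revenue = {2022: 57.4, 2023: 124.5, 2024: 312.4}


def cagr(first, last, periods):
    """Compound annual growth rate over `periods` year-over-year intervals."""
    return (last / first) ** (1 / periods) - 1


# Two intervals separate 2022 and 2024.
growth = cagr(revenue[2022], revenue[2024], periods=2)

# Year-over-year multiple: each year's revenue divided by the prior year's.
yoy = {year: revenue[year] / revenue[year - 1] for year in (2023, 2024)}

print(f"CAGR 2022-2024: {growth:.1%}")
for year, ratio in yoy.items():
    print(f"{year} revenue was {ratio:.2f}x the prior year")
```

On these inputs the computed CAGR comes out at about 133%, in line with the rounded 130% figure, and each annual multiple is above 2x.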

GLM-4.7 Availability

Default Model for Coding Plan: https://z.ai/subscribe

Try it now: https://chat.z.ai/

Weights: https://huggingface.co/zai-org/GLM-4.7

Technical blog: https://z.ai/blog/glm-4.7

About Z.ai

Founded in 2019, Z.ai originated from the commercialization of technological achievements at Tsinghua University. Its team are pioneers in launching large-model research in China. Leveraging its original GLM (General Language Model) pre-training architecture, Z.ai has built a full-stack model portfolio covering language, code, multimodality, and intelligent agents. Its models are compatible with more than 40 domestically produced chips, making it one of the few Chinese companies whose technical roadmap remains in step with global top-tier standards.

View original content to download multimedia:https://www.prnewswire.com/news-releases/zai-releases-glm-4-7-designed-for-real-world-development-environments-cementing-itself-as-chinas-openai-302649821.html

SOURCE Z.ai